Implementing continuous blue/green deployments on Azure Container Apps by using GitHub Actions

Microsoft Azure now offers Azure Container Apps, a new serverless application platform for containers, along with a couple of other nice improvements around GitHub Actions and Azure Monitor. Therefore I wanted to revisit the quite complicated scenario of performing continuous blue/green deployments on Kubernetes and Azure, and show you how to implement it with the new technology stack. If you have not had a chance to play with Container Apps yet and want to try it out yourself, you have come to the right place.

Update, December 1st 2022: the Azure Container Apps deployment process now respects readiness probes and only promotes a new revision after it has signalled that it is healthy. This makes simple zero-downtime deployments easier to implement. See https://learn.microsoft.com/en-us/azure/container-apps/application-lifecycle-management#zero-downtime-deployment

We will make use of the following new features:

- Azure Container Apps as runtime for our containers

- Built-in Dapr for service-to-service invocation inside the cluster

- Built-in Dapr components for using Redis Cache without an SDK

- Built-in KEDA for automatically scaling containers based on traffic

- Built-in Envoy for implementing traffic splits between revisions

- Built-in distributed tracing in Application Insights

- GitHub Actions with federated identity credential (OpenID Connect) support for Azure

The objective is to implement blue/green deployments and validate how much easier a zero-downtime continuous deployment process is with Azure Container Apps and GitHub Actions. Of course you can use any other CI/CD toolchain of your choice: since most of the logic is implemented in shell scripts, you should easily find a way to move the process over.

I have written a demo application that implements prime factor calculations in a very complicated and distributed fashion. It uses Dapr for caching and service-to-service invocation and consists of a backend and a frontend service to simulate a little bit of realistic complexity. The code, scripts and setup instructions are part of my GitHub repository. The easiest way to reproduce the demo is to fork the repo and try out the scenario in your own Azure environment.

In the following, I want to give you some insight into how it works.

The very first thing you need to do is to fork the repo so that you can set up your own GitHub Actions pipelines. You might have heard that GitHub and Azure have upgraded their trust relationship capabilities; you can read more about this here. Effectively this means that you can now grant the pipelines in your GitHub repo permission to exchange a GitHub token for an Azure AD token, and thereby interact with your Azure resources without sharing client secrets, which is a huge improvement.

The pipelines in my repo assume that you have created an app registration and granted it “Contributor” permissions on a dedicated resource group in your Azure subscription. You can set this up by executing the following commands:

```bash
DEPLOYMENT_NAME="dzca11cgithub" # name of the deployment
RESOURCE_GROUP=$DEPLOYMENT_NAME # name of the resource group
LOCATION="canadacentral" # Azure region, e.g. canadacentral or northeurope
AZURE_SUBSCRIPTION_ID=$(az account show --query id -o tsv) # your subscription id
AZURE_TENANT_ID=$(az account show --query tenantId -o tsv) # your tenant id
GHUSER="denniszielke" # replace with your GitHub user name
GHREPO="blue-green-with-containerapps" # name of the repo
GHREPO_BRANCH=":ref:refs/heads/main" # only trust workflows running on the main branch

# create the resource group and a service principal with Contributor rights on it
az group create -n $RESOURCE_GROUP -l $LOCATION -o none
AZURE_CLIENT_ID=$(az ad sp create-for-rbac --name "$DEPLOYMENT_NAME" --role contributor \
  --scopes "/subscriptions/$AZURE_SUBSCRIPTION_ID/resourceGroups/$RESOURCE_GROUP" --query appId -o tsv)

# object id of the app registration (current Azure CLI versions return it as "id", older ones as "objectId")
AZURE_CLIENT_OBJECT_ID="$(az ad app show --id $AZURE_CLIENT_ID --query id -o tsv)"

# trust GitHub's OIDC token issuer for workflows in this repo and branch
az rest --method POST \
  --uri "https://graph.microsoft.com/beta/applications/$AZURE_CLIENT_OBJECT_ID/federatedIdentityCredentials" \
  --body "{\"name\":\"$DEPLOYMENT_NAME\",\"issuer\":\"https://token.actions.githubusercontent.com\",\"subject\":\"repo:$GHUSER/$GHREPO$GHREPO_BRANCH\",\"description\":\"GitHub Actions for $DEPLOYMENT_NAME\",\"audiences\":[\"api://AzureADTokenExchange\"]}"
```

If you want to grant these permissions manually, you need to allow your GitHub repository to interact with your Azure AD tenant. Go to “Azure Active Directory” -> “App registrations” -> “YourApp” -> “Certificates & secrets” -> “Federated credentials” and allow the pipelines that are triggered on the main branch to exchange a token within the security context of your app registration.

Once that is done, you need to create the following GitHub secrets in your repository, using the values created above, so that your GitHub pipelines know where to deploy. Notice that you no longer need a client secret, which is great.
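As a hedged sketch, the secrets can also be created from a terminal with the GitHub CLI (`gh`). This assumes the workflows read the three identifiers from the setup script under the names `AZURE_CLIENT_ID`, `AZURE_TENANT_ID` and `AZURE_SUBSCRIPTION_ID`, which are the names commonly used with the `azure/login` action. Verify the names against the workflow files in your fork before relying on them. The function name `set_github_secrets` is hypothetical.

```bash
# Hypothetical helper: store the identifiers from the setup script as repository secrets.
# ASSUMPTION: the secret names match what the workflows in your fork expect.
# Requires the GitHub CLI (gh) with an authenticated session.
set_github_secrets() {
  local repo="$1" # OWNER/REPO of your fork, e.g. "$GHUSER/$GHREPO"
  gh secret set AZURE_CLIENT_ID --repo "$repo" --body "$AZURE_CLIENT_ID"
  gh secret set AZURE_TENANT_ID --repo "$repo" --body "$AZURE_TENANT_ID"
  gh secret set AZURE_SUBSCRIPTION_ID --repo "$repo" --body "$AZURE_SUBSCRIPTION_ID"
}
```

Run it in the same shell session as the setup script, for example `set_github_secrets "$GHUSER/$GHREPO"`, so that the variables are still populated.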

After this is done, you can manually trigger the deploy-infrastructure pipeline to create your Azure resources.

Next you can perform the first deployment of the calculator app by either changing the source code or manually triggering the deploy-full-blue-green pipeline.
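If you prefer the terminal over the GitHub web UI, the same manual triggers can be sketched with `gh workflow run`, which accepts a workflow name, ID or file name. This assumes the pipelines are named `deploy-infrastructure` and `deploy-full-blue-green` as in this post and declare a `workflow_dispatch` trigger. If the names differ in your fork, pass the workflow file name instead. The helper name `run_pipeline` is hypothetical.

```bash
# Hypothetical helper: start a pipeline in your fork from the command line.
# ASSUMPTION: the workflow declares a workflow_dispatch trigger.
run_pipeline() {
  local repo="$1" workflow="$2" # OWNER/REPO and workflow name or file name
  gh workflow run "$workflow" --repo "$repo"
}
```

For example, run `run_pipeline "$GHUSER/$GHREPO" deploy-infrastructure` first. Once the infrastructure is in place, run `run_pipeline "$GHUSER/$GHREPO" deploy-full-blue-green`.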

Now that we have a blue version of our application up and running, let’s take a look at the 300 lines of shell script (you have to learn bash sooner or later anyway 🤣) that make up the deployment process. If you do not want to use GitHub pipelines, you can run the same process from your local machine by calling the deploy.sh script with the following parameters:

```bash
bash ./deploy.sh "$RESOURCE_GROUP" "$IMAGE_TAG" "$CONTAINER_REPOSITORY"
```

The principle of blue/green deployments is that we do not replace the previous revision (here referred to as the blue revision). Instead, we bring up the new version (here referred to as the green revision) next to the existing one, but do not expose it to the actual users right away. Once we have successfully validated that the green revision works correctly, we promote it to be the public version by changing the routing configuration without downtime. If something is wrong with the green revision, we can revert without users ever noticing an interruption.
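The first step of that principle, bringing up green next to blue, could look like the following with the Azure CLI. This is a minimal sketch, not the logic of `deploy.sh`. It assumes the app already runs in multiple revision mode and that its traffic configuration pins weights to named revisions rather than to `latestRevision`, so that the new revision starts without production traffic. The function name and all arguments are placeholders.

```bash
# Hypothetical helper: create a new (green) revision next to the running blue one.
# ASSUMPTIONS: multiple revision mode, and traffic weights pinned to named revisions.
deploy_green_revision() {
  local app="$1" rg="$2" image="$3" suffix="$4"
  # a new image plus a revision suffix creates a new revision named "<app>--<suffix>"
  az containerapp update --name "$app" --resource-group "$rg" \
    --image "$image" --revision-suffix "$suffix" -o none
  # print the name of the revision that was just created
  az containerapp show --name "$app" --resource-group "$rg" \
    --query properties.latestRevisionName -o tsv
}
```

A call might look like `GREEN=$(deploy_green_revision my-frontend "$RESOURCE_GROUP" "my-registry.azurecr.io/my-frontend:v2" v2)`, where the app and image names are made up. Revision suffixes only allow lowercase letters, digits and dashes, so an image tag containing dots cannot be used as a suffix unchanged.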

Azure Container Apps allows you to run multiple revisions at the same time. Through its API, you can also control what share of the incoming traffic each revision receives. This means the necessary technical features are already part of the platform.

In the initial deployment, we need to ensure that the revision mode is set to multiple. This means that we control how many revisions are active and which of them receive production traffic. The alternative is single mode, in which exactly one revision (the latest one that has been activated) receives production traffic. While it makes sense in some scenarios to let the platform switch between revisions automatically, here we want an extra level of control over the production traffic.
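For an app that already exists, switching the revision mode can be sketched with `az containerapp revision set-mode`. The function name and arguments are placeholders.

```bash
# Hypothetical helper: allow several revisions of one container app to be active at once.
enable_multiple_revisions() {
  local app="$1" rg="$2"
  az containerapp revision set-mode --name "$app" --resource-group "$rg" \
    --mode multiple -o none
}
```

When the app is created from scratch, the same setting can instead be passed to `az containerapp create` through its `--revisions-mode multiple` option.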

With multiple revisions we also get a dedicated FQDN for each revision. This allows us to warm up and validate the new revision privately, without our customers noticing. In the screenshot below you can see that the default traffic weight for each new revision is 0, which ensures that all traffic continues to be routed to the existing production revision.
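A private validation through the revision FQDN might look like the minimal sketch below. It reads the FQDN of the revision and requires a number of successful HTTP calls before the revision counts as healthy. It assumes the app answers on the given path, for example a hypothetical `/ping` endpoint. The default of 5 calls is an illustrative choice, not a value from the demo. The function name is hypothetical.

```bash
# Hypothetical helper: warm up and smoke-test a revision through its own FQDN.
# ASSUMPTIONS: the app serves the given path over HTTPS, and every one of
# `attempts` calls must succeed for the revision to count as healthy.
validate_revision() {
  local app="$1" rg="$2" revision="$3" path="${4:-/}" attempts="${5:-5}"
  local fqdn i
  fqdn=$(az containerapp revision show --name "$app" --resource-group "$rg" \
    --revision "$revision" --query properties.fqdn -o tsv)
  for ((i = 1; i <= attempts; i++)); do
    # -f makes curl return a non-zero exit code on HTTP errors (4xx/5xx)
    if ! curl -fsS -o /dev/null --max-time 10 "https://$fqdn$path"; then
      echo "revision $revision failed on attempt $i" >&2
      return 1
    fi
  done
  echo "revision $revision answered $attempts requests successfully"
}
```

Because the function returns a non-zero exit code on the first failed call, a pipeline step can simply stop the rollout with `validate_revision ... || exit 1`.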

In case we decide that our revision is not performing as expected we can quietly deactivate the revision again and start over with the next release. You can create a very large number of revisions for each Container App so it is absolutely fine to use this concept for all deployments.

After we have validated the new version, we can gradually shift production traffic to the new green revision.
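The gradual shift can be sketched as a loop over `az containerapp ingress traffic set`. In each step, the green weight is raised and blue receives the remainder, so that the weights always add up to 100. The step sizes and the pause between steps (`STEP_PAUSE`, default 30 seconds) are illustrative assumptions, not values from the demo. In practice you would check your metrics before moving on to the next step. The function name is hypothetical.

```bash
# Hypothetical helper: move production traffic from blue to green in steps.
# Usage: shift_traffic <app> <rg> <blue-revision> <green-revision> <green-weight>...
# ASSUMPTION: both revisions are active; step sizes and pause are illustrative.
shift_traffic() {
  local app="$1" rg="$2" blue="$3" green="$4"
  shift 4
  local weight
  for weight in "$@"; do
    # the weights of the listed revisions must add up to 100
    az containerapp ingress traffic set --name "$app" --resource-group "$rg" \
      --revision-weight "$blue=$((100 - weight))" "$green=$weight" -o none || return 1
    echo "green revision $green now receives $weight% of the traffic"
    sleep "${STEP_PAUSE:-30}"
  done
}
```

For example, `shift_traffic my-frontend "$RESOURCE_GROUP" "$BLUE" "$GREEN" 10 50 100` moves traffic in three steps and ends with all of it on green.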

If the new release does not respond as expected, we can revert the traffic split and deactivate the new release without users ever noticing the broken release.
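A rollback along these lines is sketched below. It first sends all traffic back to blue, and only then deactivates green, so that no requests are routed to a revision that is shutting down. The function name and arguments are placeholders. A deactivated revision can be brought back later with `az containerapp revision activate`.

```bash
# Hypothetical helper: revert to blue and take the green revision out of service.
rollback_to_blue() {
  local app="$1" rg="$2" blue="$3" green="$4"
  # route 100% of the traffic to blue before touching green
  az containerapp ingress traffic set --name "$app" --resource-group "$rg" \
    --revision-weight "$blue=100" "$green=0" -o none || return 1
  az containerapp revision deactivate --name "$app" --resource-group "$rg" \
    --revision "$green" -o none
}
```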

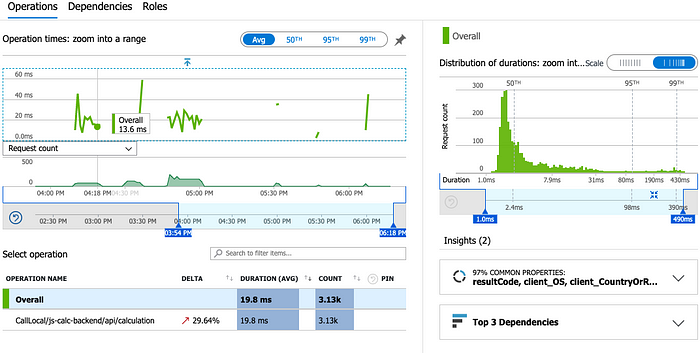

Application Insights is very helpful here. It lets us track the performance and duration of all calls from the frontend to the backend, and also the calls from the frontend to the Redis Cache. In the Live Metrics blade we can also watch the blue/green switch in real time and validate that there was indeed no outage during the switch from the old release to the new one.
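Besides watching Live Metrics, the same question ("did any requests fail during the switch?") can be answered from a script. The minimal sketch below queries the `requests` table per minute. It assumes the `application-insights` Azure CLI extension is installed (`az extension add --name application-insights`) and that a one-hour window (`--offset 1h`) covers the rollout. The component name is a placeholder, and the function name is hypothetical.

```bash
# Hypothetical helper: count total and failed requests per minute around a release.
# ASSUMPTIONS: application-insights CLI extension installed; the default 1h window covers the switch.
failed_requests_per_minute() {
  local ai_name="$1" rg="$2" window="${3:-1h}"
  az monitor app-insights query --app "$ai_name" --resource-group "$rg" \
    --offset "$window" \
    --analytics-query "requests | summarize total = count(), failed = countif(success == false) by bin(timestamp, 1m) | order by timestamp asc" \
    -o json
}
```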

If you look at the shell script, you will notice some extra checks that make sure there are never more than two active revisions. You will also notice that the backend service uses a public endpoint. In theory the backend service does not need to be exposed publicly, but our GitHub pipeline has no private access to the VNET, so this is a trade-off to make the demo work smoothly.
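The "never more than two active revisions" rule could be sketched as follows. This is an illustration of the idea, not the check from `deploy.sh`. The function lists all active revisions and deactivates every one that is neither blue nor green. It assumes the extra revisions no longer receive any traffic. The function name is hypothetical.

```bash
# Hypothetical helper: keep only the blue and green revisions active.
# ASSUMPTION: every other active revision already has a traffic weight of 0.
deactivate_other_revisions() {
  local app="$1" rg="$2" blue="$3" green="$4"
  local revision
  for revision in $(az containerapp revision list --name "$app" --resource-group "$rg" \
      --query "[?properties.active].name" -o tsv); do
    if [[ "$revision" != "$blue" && "$revision" != "$green" ]]; then
      echo "deactivating $revision"
      az containerapp revision deactivate --name "$app" --resource-group "$rg" \
        --revision "$revision" -o none
    fi
  done
}
```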

Container Apps can also keep the right number of replicas of our apps running by using a scaling rule. In this case we want to ensure that each replica does not handle more than 10 concurrent requests. We could also let the platform scale down to 0 replicas and thereby save costs when no traffic at all is received. However, this would impact our ability to roll back without downtime, which is why in this case we only allow scaling down to one replica.
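If you prefer the Azure CLI over YAML, equivalent scale settings can be applied with `az containerapp update`. This is a sketch: the function name and arguments are placeholders, and the values mirror the YAML fragment in this section. Note that scale settings belong to the revision template, so in multiple revision mode such a change creates a new revision that needs to go through the same blue/green process.

```bash
# Hypothetical helper: 1 to 4 replicas, scaling out at 10 concurrent requests per replica.
set_http_scale_rule() {
  local app="$1" rg="$2"
  az containerapp update --name "$app" --resource-group "$rg" \
    --min-replicas 1 --max-replicas 4 \
    --scale-rule-name httprule --scale-rule-type http \
    --scale-rule-http-concurrency 10 -o none
}
```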

```yaml
scale:
  minReplicas: 1 # change to 0 if you want to allow scale to zero
  maxReplicas: 4
  rules:
  - name: httprule
    custom:
      type: http
      metadata:
        concurrentRequests: '10'
```

Both the frontend and the backend containers use Dapr sidecars, which are part of the platform. The primary benefit is that we can use Azure managed services like Azure Redis, Application Insights and Key Vault without hard-wiring the code to Azure SDKs. This makes our application easier to test (locally, without Azure) and might even allow us to run the containers in another cloud or service. Every interaction between our frontend, our backend and the Azure platform goes through the Dapr sidecars. All we have to do is enable Dapr and make sure that the URLs reference the Dapr sidecar port (in this case 3500).
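Enabling the sidecar for an existing app can be sketched with `az containerapp dapr enable`. The app ID is the name other services use to reach this app, such as `js-calc-backend`. The app port is the port the container listens on. Port 8080 in the example below is an assumption, not a value from the demo. The function name is hypothetical.

```bash
# Hypothetical helper: turn on the Dapr sidecar for a container app.
enable_dapr() {
  local app="$1" rg="$2" app_id="$3" app_port="$4"
  az containerapp dapr enable --name "$app" --resource-group "$rg" \
    --dapr-app-id "$app_id" --dapr-app-port "$app_port" -o none
}
```

For example: `enable_dapr js-calc-backend "$RESOURCE_GROUP" js-calc-backend 8080`, assuming the container app carries the same name as its Dapr app ID.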

We also implement the service-to-service interaction between frontend and backend by using the Dapr sidecars. Each of our apps has a dedicated dapr-app-id, which the frontend container uses to call the backend API via the following URL:

http://localhost:3500/v1.0/invoke/js-calc-backend/method/DoSomeThing
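The URL follows the Dapr service invocation pattern `/v1.0/invoke/<app-id>/method/<method>` on the local sidecar. The minimal sketch below builds the URL and calls it with `curl` from inside the frontend container. It uses the `DAPR_HTTP_PORT` environment variable if it is set and falls back to 3500 otherwise. Both function names are hypothetical.

```bash
# Hypothetical helpers: build and call a Dapr service invocation URL via the local sidecar.
dapr_invoke_url() {
  local app_id="$1" method="$2"
  echo "http://localhost:${DAPR_HTTP_PORT:-3500}/v1.0/invoke/$app_id/method/$method"
}

invoke_backend() {
  # -f: fail on HTTP errors so the caller can react to a failing backend
  curl -fsS "$(dapr_invoke_url js-calc-backend "$1")"
}
```

For example, `invoke_backend DoSomeThing` calls the same URL as shown above.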

Another nice side effect of activating the Dapr sidecars is that we also receive distributed tracing information for all interactions between our services and the Azure resources that Dapr interacts with.

At the very end of the deployment script, we also create a release annotation after each successful deployment. This makes it easy to compare performance metrics and error data in our Application Insights dashboards.
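A release annotation can be created with `az rest` against the Application Insights `Annotations` endpoint. The sketch below is modeled on Microsoft's release annotation documentation, not taken from `deploy.sh`. The `api-version` (2015-05-01) and the body fields (`Id`, `AnnotationName`, `EventTime`, `Category`, `Properties`) are assumptions based on that documentation, so double-check them before use. The `ai_resource_id` argument is the full resource ID of the Application Insights component, and the function name is hypothetical.

```bash
# Hypothetical helper: mark a deployment in Application Insights.
# ASSUMPTIONS: api-version 2015-05-01 and the body fields below.
create_release_annotation() {
  local ai_resource_id="$1" release="$2"
  local id now body
  # random GUID for the annotation (Linux kernel source first, uuidgen as fallback)
  id=$(cat /proc/sys/kernel/random/uuid 2>/dev/null || uuidgen)
  now=$(date -u +%Y-%m-%dT%H:%M:%S)
  # Properties is itself a JSON document serialized as a string, hence the escaped quotes
  body=$(printf '{"Id":"%s","AnnotationName":"%s","EventTime":"%s","Category":"Deployment","Properties":"{\\"ReleaseName\\":\\"%s\\"}"}' \
    "$id" "$release" "$now" "$release")
  # az rest prefixes resource IDs that start with /subscriptions with the ARM endpoint
  az rest --method put --uri "$ai_resource_id/Annotations?api-version=2015-05-01" \
    --body "$body" -o none
}
```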

This small example has hopefully shown you that Azure Container Apps is a very powerful platform based on relatively simple concepts. If you need zero-downtime continuous application upgrades, compare this with the infrastructure effort and concepts required to achieve something similar on Kubernetes. You may well be excited about the new service.

As always, I am very interested in your feedback or pull requests on the repo for possible improvements.

— — The End (for now)